Foundation Models For Atoms, Not Bits

Twitter isn’t short on examples of large language models like ChatGPT doing extraordinary things - rewriting blog posts, solving maths problems, even writing code and poetry. As a result, some people are calling them "Foundation Models" and predicting that they'll be used in a wide range of products.

As someone who uses AI to explore new materials (think semiconductors, carbon capture, and the like), I’m especially excited about the possibilities here. It's worth noting that while computer-aided design has revolutionized many fields, materials science has remained largely resistant to these kinds of advances. We don’t, for example, design new batteries on a computer the way we design an aeroplane wing. But what if a foundation model could change that? Perhaps it could help us design new computer chips, batteries, and climate technologies at an unprecedented pace.

Of course, this is easier said than done. Language models like ChatGPT are designed to model language, not the physical world. They can't reliably predict the results of physics simulations, for instance - try asking GPT-4 to simulate three particles according to Newtonian physics, and you'll get an incorrect answer (and one that takes much longer than it should to produce).
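For contrast, here is roughly what that three-particle task looks like as conventional scientific code. This is a minimal sketch rather than a production integrator: the units (G = 1), the softening term, the masses and the starting positions and velocities are all assumptions chosen for illustration.

```python
import math

G = 1.0  # gravitational constant in arbitrary units (assumed for illustration)

def accelerations(pos, masses, softening=1e-3):
    """Pairwise Newtonian gravitational accelerations in 2D."""
    n = len(pos)
    acc = [[0.0, 0.0] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            # Softening avoids a division by zero if two particles nearly collide.
            r2 = dx * dx + dy * dy + softening ** 2
            inv_r3 = 1.0 / (r2 * math.sqrt(r2))
            acc[i][0] += G * masses[j] * dx * inv_r3
            acc[i][1] += G * masses[j] * dy * inv_r3
    return acc

def simulate(pos, vel, masses, dt=1e-3, steps=1000):
    """Velocity Verlet (kick-drift-kick); returns final positions and velocities."""
    pos = [list(p) for p in pos]
    vel = [list(v) for v in vel]
    acc = accelerations(pos, masses)
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(2):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
                pos[i][k] += dt * vel[i][k]        # drift
        acc = accelerations(pos, masses)
        for i in range(len(pos)):
            for k in range(2):
                vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return pos, vel

def total_momentum(vel, masses):
    """Total linear momentum, which Newtonian gravity should conserve."""
    return [sum(m * v[k] for m, v in zip(masses, vel)) for k in range(2)]

# Hypothetical initial conditions with zero total momentum.
masses = [1.0, 1.0, 1.0]
pos0 = [(1.0, 0.0), (-0.5, 0.8), (-0.5, -0.8)]
vel0 = [(0.0, 0.4), (-0.3, -0.2), (0.3, -0.2)]
pos1, vel1 = simulate(pos0, vel0, masses)
```

A few dozen lines of arithmetic give an answer whose total momentum stays at zero up to floating-point error, which is the kind of physical consistency a text-prediction model has no built-in reason to respect.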

So what's the solution? We should take the idea of "foundation modeling" - using large generative models trained on lots of data - and adapt it to scientific computing. For materials science, this could mean a foundation model that could be used to simulate a wide range of materials with high fidelity.

As a generative model, it may also be capable of ‘inverse design’. Just as ChatGPT can generate a poem with a particular rhyme scheme, such a model could generate new materials with desired properties, such as conductivity or hardness, and optimise existing materials to improve their performance.

This approach has the potential to revolutionize materials science. Rather than relying on trial-and-error experimentation, scientists would use a foundation model to create materials that meet their specific needs. With the ability to simulate a wide range of materials, a foundation model for materials science could open up new possibilities for discovery and innovation.

The major breakthroughs in foundation modelling over the past year have broadly been the result of pairing a scalable, reliable model architecture with the correct generative modelling task. Once these have been identified, they must then be engineered to an exacting standard. Building a foundation model for materials science requires finding the right architecture for the job, as well as the right generative modelling task.

For large language models, this was pairing the transformer architecture with the task of predicting the next word in a sequence. A huge variety of knowledge about the world can be encoded in this way - and transformers have a very well documented ability to continue to absorb as much data as you can provide.

For images, it was pairing convolutional neural networks (CNNs) with diffusion modelling. For reasons that are yet to be fully understood, CNNs perform extraordinarily well when trained with a denoising diffusion loss.
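To make "denoising diffusion loss" concrete, here is a minimal sketch of the commonly used noise-prediction form of the objective: corrupt a clean sample with Gaussian noise, ask a model to predict that noise, and score it by mean squared error. The function names, the toy data and the `oracle_denoiser` (a stand-in for a trained network that cheats by seeing the clean sample) are all hypothetical.

```python
import math
import random

def noise_sample(x0, alpha_bar, rng):
    """Forward process: mix the clean sample x0 with Gaussian noise.

    alpha_bar in (0, 1) sets how much of the original signal survives.
    """
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(alpha_bar) * x + math.sqrt(1.0 - alpha_bar) * e
           for x, e in zip(x0, eps)]
    return x_t, eps

def denoising_loss(eps_pred, eps):
    """Mean squared error between predicted and true noise."""
    return sum((p - e) ** 2 for p, e in zip(eps_pred, eps)) / len(eps)

def oracle_denoiser(x_t, x0, alpha_bar):
    """Hypothetical perfect model: recovers the noise because it is given x0."""
    return [(xt - math.sqrt(alpha_bar) * x) / math.sqrt(1.0 - alpha_bar)
            for xt, x in zip(x_t, x0)]

rng = random.Random(0)
x0 = [0.2, -1.0, 0.5, 0.9]  # toy "image" of four pixel values (assumed)
alpha_bar = 0.5             # assumed noise level for this single step

x_t, eps = noise_sample(x0, alpha_bar, rng)
loss_oracle = denoising_loss(oracle_denoiser(x_t, x0, alpha_bar), eps)
loss_zero = denoising_loss([0.0] * len(eps), eps)  # a model that always predicts zero
```

In real training the oracle is replaced by a network that only sees the noisy sample and the noise level, and the loss is averaged over many samples and noise levels.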

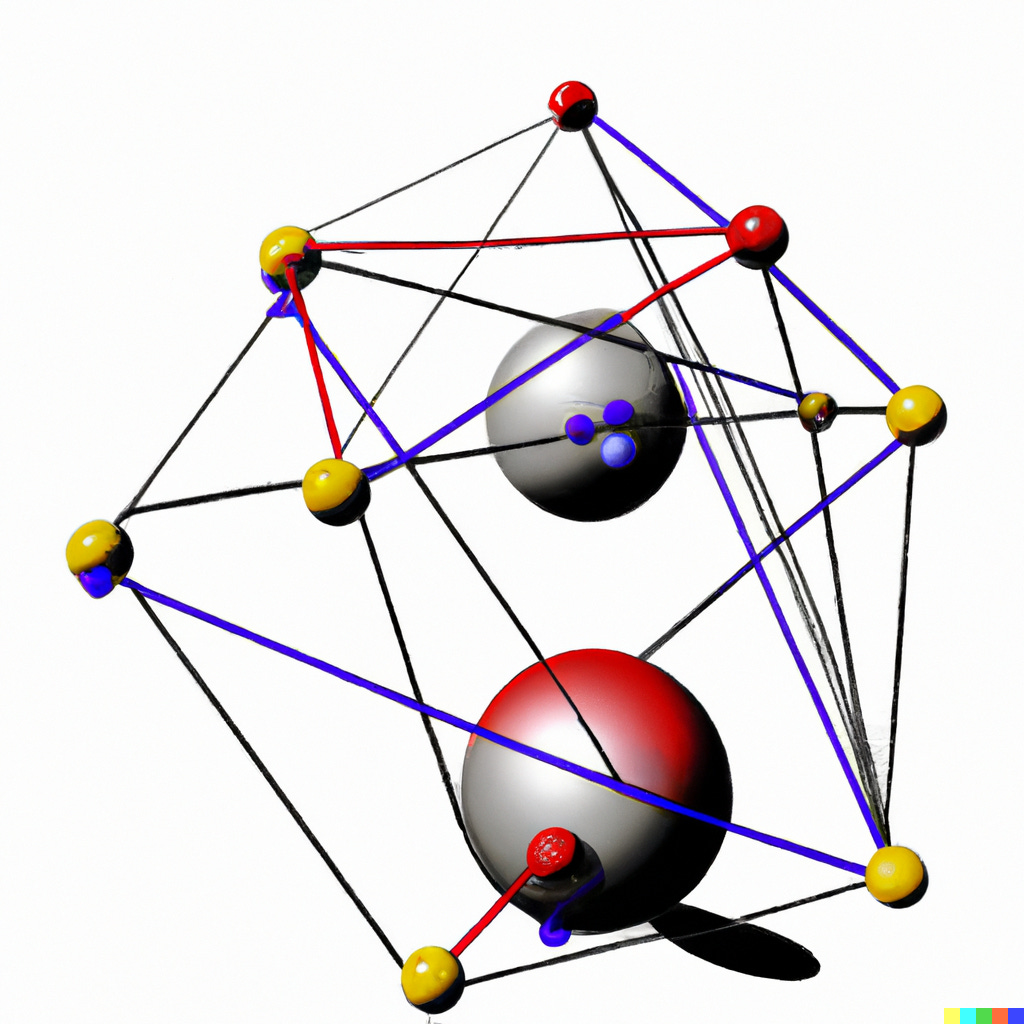

A combination that would result in a breakthrough is starting to emerge for materials science. Graph neural networks (GNNs) seem to be the correct architecture for materials science, but we have not had that ‘break out’ moment where, as we add more data, performance keeps getting better. Diffusion models have some nice properties, but will surely need adapting to be more physically informed if they're going to be used for generative modelling in materials science. And then, the engineering is different for graphs and will need innovation and dedication.
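For readers unfamiliar with GNNs, the core operation is message passing over a graph whose nodes are atoms and whose edges are bonds or near neighbours. The sketch below shows one round on a water molecule, followed by a sum readout. It is an illustration only: a real GNN would use learned functions for the messages and updates, and the one-hot features and helper names here are assumptions.

```python
def message_passing_step(features, edges):
    """One round: each node adds the sum of its neighbours' features to its own.

    A real GNN would apply learned functions here; this version has no weights.
    """
    updated = {node: list(vec) for node, vec in features.items()}
    for a, b in edges:  # edges are undirected, so messages flow both ways
        for k in range(len(features[a])):
            updated[a][k] += features[b][k]
            updated[b][k] += features[a][k]
    return updated

def readout(features):
    """Sum over nodes, so the result does not depend on how atoms are ordered."""
    dim = len(next(iter(features.values())))
    return [sum(vec[k] for vec in features.values()) for k in range(dim)]

# Water: one oxygen bonded to two hydrogens; features are one-hot [is_H, is_O].
features = {"O": [0.0, 1.0], "H1": [1.0, 0.0], "H2": [1.0, 0.0]}
edges = [("O", "H1"), ("O", "H2")]

updated = message_passing_step(features, edges)
summary = readout(updated)
```

After one round, each hydrogen "knows" it is attached to an oxygen and the oxygen "knows" it has two hydrogens. Stacking more rounds lets information travel further across the structure.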

But even once we have the right architecture and task, there's still a lot of work to be done. The bar for transformative impact in materials science is much higher than it is in other fields. A foundation model for materials science will need to be able to design molecules and materials that actually work in the lab, not just perform well on computational benchmarks. Surely this is a more worthwhile thing to work on for startups than thin layers on top of OpenAI APIs.

Many of the grand challenges in AI have fallen in recent years, certainly faster than I expected. The challenges laid out above are very difficult, but I believe such a system could be achieved with the right team and dedication. If we can do that, we could meaningfully accelerate scientific progress in atoms, not just bits.